Automated Recognition of Rodent Behavior

Introduction

In my PhD research [1] I addressed the automated analysis of social behavior in rodents. I focused on the question of how we can measure, and thus quantify, social behavior efficiently and objectively using automated measuring tools. One specific goal was to improve such tools so as to enable the analysis of many different interactions in various settings.

All figures on this page are from my dissertation [1].

Why is the study of behavior relevant?

In essence, because we want to understand how the brain works and why it sometimes stops working (e.g., due to neurodegenerative diseases such as Alzheimer’s and Huntington’s), and eventually find cures for patients suffering from such diseases. These are complicated questions that require fundamental research into the links between the organism, its brain and its behavior [2]. Measuring behavior allows us to compare it under different conditions and may thus, together with the study of neuronal functions, reveal the sought relationships [3], [4].

Rodents are not the only organisms whose behavior is studied in this context. There is also research on Drosophila (fruit flies), zebrafish, non-human primates, and of course humans.

Why automate the measurement of behavior?

Measuring behavior involves labeling every behavioral event in multiple hours of video recordings. Labeling manually is time-consuming; it takes a human approximately 3 to 12 times the duration of the recording. The more complex the behavior to analyze, the more data we need to process to maintain validity. For example, if we aim to analyze long-term behavior patterns over multiple hours or even days, the sheer number of behavior events to label makes it impossible to do so manually within a reasonable time. Automating the labeling removes much of this manual effort.

Besides saving time, automated labeling is consistent, produces replicable results even across laboratories, and can operate for extended periods of time without suffering from fatigue. Because of its efficiency, it allows reanalyzing previous data under new hypotheses, saving both time and animals. Automation therefore contributes directly to the goal of the three Rs [5] – the ethical guideline for animal research which aims to replace animal testing with alternative techniques, to reduce the number of animals, and to refine existing methodology.

What are the limitations of automation?

One of the main limitations of current automated methods is their lack of flexibility when it comes to variations in the environment and experimental settings [6]. In contrast, humans are typically not affected by such variations because of their exceptional perception and interpretive skills. Abstracting from irrelevant environmental factors is something machines are not yet as good at.

Recognizing specific interactions in videos

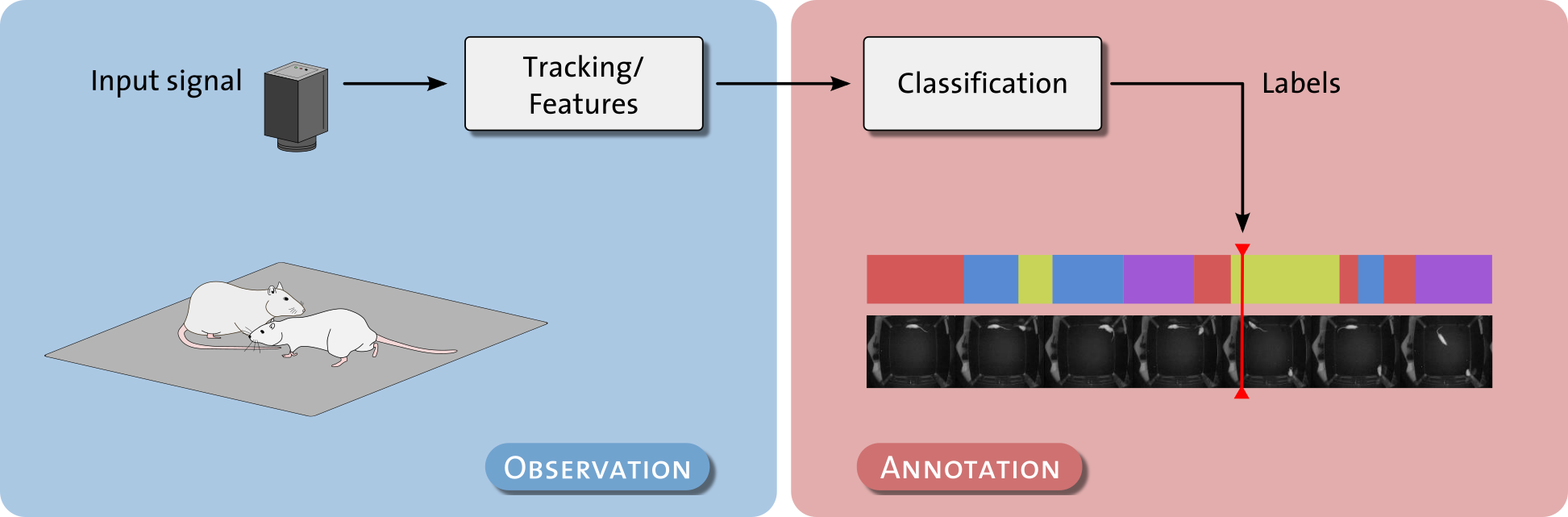

How does automated behavior recognition work?

A standard approach is to first teach the computer what the different behaviors look like in video. By providing a learning algorithm with examples, the computer can build a model for every behavior. These models then allow the computer to distinguish the behaviors in new videos and to label them accordingly. For more information on the technical challenges, I recommend having a look at these papers [7], [8].
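To make the idea of "one model per behavior" concrete, here is a minimal sketch using a nearest-centroid classifier. This is only an illustration, not the method used in the dissertation: the feature vectors (distance, relative speed), the labels `following` and `solitary`, and the function names `train_models` and `predict` are all assumptions made up for the example.

```python
import math


def train_models(examples):
    """Build one model per behavior: the mean feature vector of its examples.

    `examples` is a list of (feature_vector, label) pairs. The features and
    labels used below are hypothetical and chosen only for illustration.
    """
    grouped = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    # zip(*rows) iterates over feature columns, so each centroid entry is a mean.
    return {
        label: [sum(column) / len(rows) for column in zip(*rows)]
        for label, rows in grouped.items()
    }


def predict(models, features):
    """Label a new feature vector with the behavior whose model is closest."""
    return min(models, key=lambda label: math.dist(models[label], features))


# Hypothetical training data: [distance between animals, relative speed].
examples = [
    ([2.0, 1.0], "following"),
    ([3.0, 1.5], "following"),
    ([30.0, 0.0], "solitary"),
    ([40.0, 0.2], "solitary"),
]
models = train_models(examples)
print(predict(models, [5.0, 1.0]))
```

Real systems use richer features and more capable classifiers, but the two phases are the same: learn from labeled examples, then label new video.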

What information does the computer use to distinguish the behaviors?

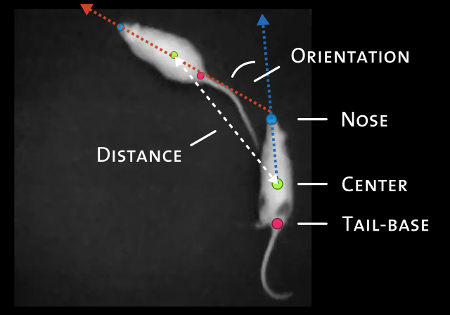

What kind of information is meaningful depends on the behaviors we want to recognize. Typically, we extract features that tell us something about the location, the body posture and the motion of the animals. In our case, we focus on social interactions. For us, the relative orientation and motion as well as the distance between the animals are of particular interest.

To extract meaningful features, the video images go through a series of processing steps. In summary, this is what happens:

- Find and identify the animals in the video and track their locations over time. If there is only one animal, the task of finding and tracking is relatively straightforward. If there are multiple animals, it becomes more difficult. The animals are often visually very similar (they often have the same genetic background), they like crawling over and under each other and playing rough-and-tumble, and they generally move fast and somewhat erratically. The younger they are, the more difficult it is. See the video below for an example.

- Derive meaningful features from the animals’ locations, postures and motions. Relevant information could be:

  - The locations over time, as they tell us something about the relative trajectories and distances (how fast the animals are walking and whether they move closer together or farther apart).

  - The relative orientation and posture (hunched vs. stretched), as they may tell us how much attention one animal pays to another.

  - Various detailed kinematics such as the positions of the nose, tail-base and paws, as these could reveal fine-grained interactions such as social grooming, boxing and kicking.

- Once the features have been extracted, we can use them to train the classification algorithm and eventually to recognize interactions in new videos.
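As a rough illustration of the feature step above, the following sketch computes the distance between two animals, the relative orientation of one toward the other, and the rate at which they approach. It assumes that a tracker already provides nose and tail-base points per frame; the function names and the midpoint approximation of the body center are assumptions for this example, not the features used in the dissertation.

```python
import math


def midpoint(p, q):
    """Midpoint of two (x, y) points."""
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def pairwise_features(nose_a, tail_a, nose_b, tail_b):
    """Return (distance, relative_orientation) of animal A with respect to B.

    All inputs are hypothetical (x, y) image coordinates for one video frame.
    The body center is approximated as the nose/tail-base midpoint.
    """
    center_a = midpoint(nose_a, tail_a)
    center_b = midpoint(nose_b, tail_b)
    distance = math.dist(center_a, center_b)

    # Heading of A: direction from its tail base to its nose.
    heading = math.atan2(nose_a[1] - tail_a[1], nose_a[0] - tail_a[0])
    # Bearing: direction from A's center to B's center.
    bearing = math.atan2(center_b[1] - center_a[1], center_b[0] - center_a[0])
    # Wrap the difference to [-pi, pi]; 0 means A points straight at B.
    diff = bearing - heading
    relative_orientation = math.atan2(math.sin(diff), math.cos(diff))
    return distance, relative_orientation


def approach_speed(distance_prev, distance_curr, fps=25.0):
    """Change in distance per second; negative values mean moving closer."""
    return (distance_curr - distance_prev) * fps


# Animal A faces along the x-axis; animal B sits 10 units ahead of it.
dist, angle = pairwise_features((1, 0), (-1, 0), (11, 0), (9, 0))
print(dist, angle)
```

Per-frame values like these can be stacked over time to form the feature vectors that the classifier is trained on.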

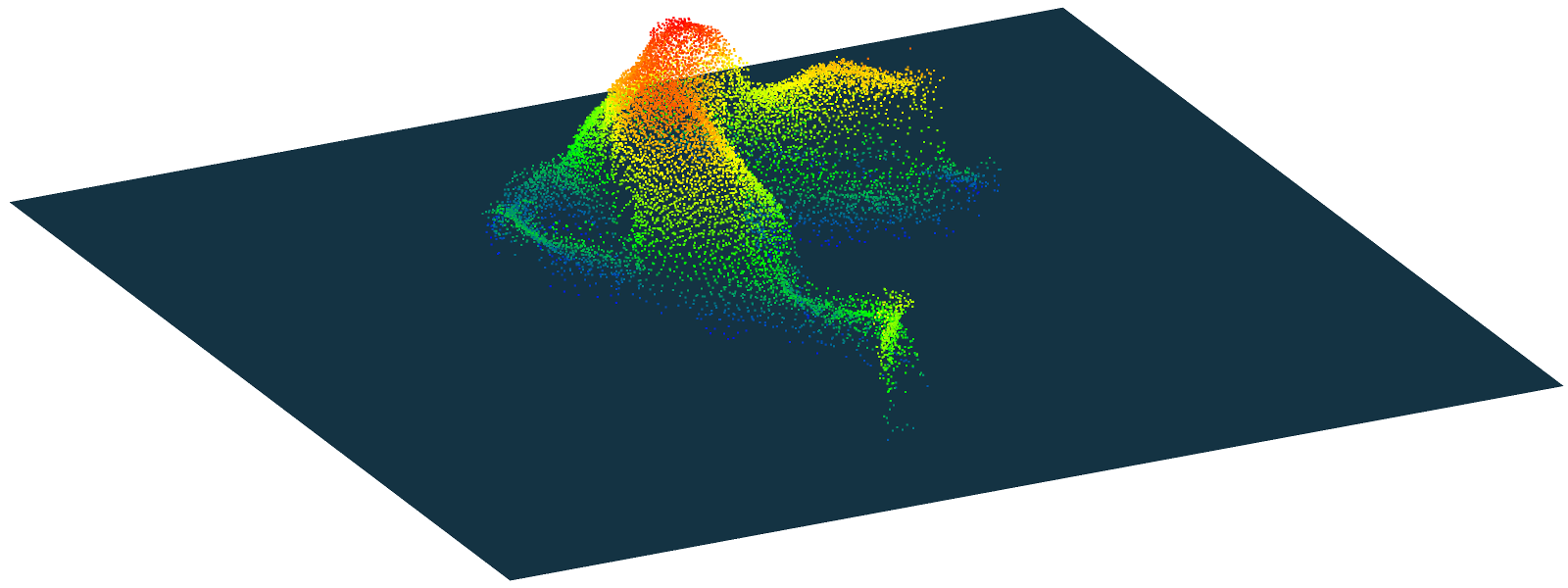

Not all of the features above can be computed reliably with current methods. A lot of ambiguity arises from occlusion and fast motion [9]. Both the viewpoint (typically from above) and the video frame rate (typically 25-30 frames per second) limit the quality of the extracted features. Recently, improvements were made by using multiple cameras from different viewpoints or 3D cameras such as the Kinect [10], [11], [12].

Teaching the computer to recognize interactions

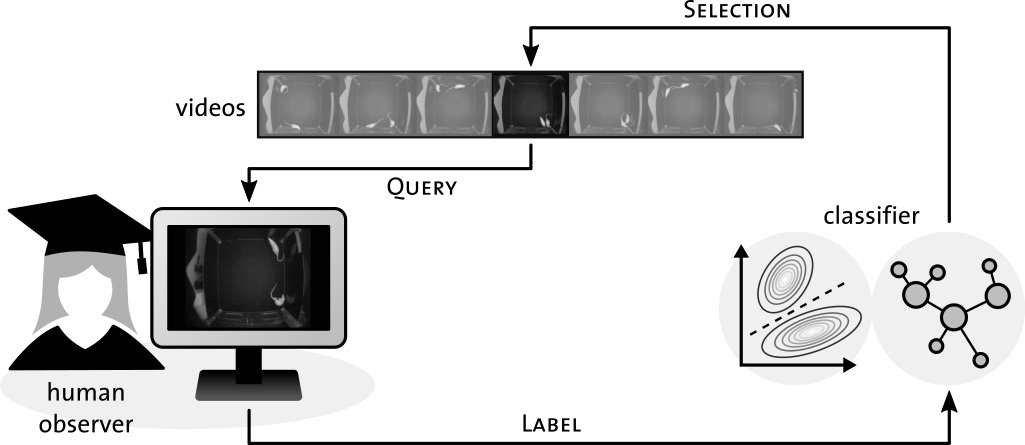

As mentioned earlier, current recognition methods sometimes fail when they are applied to an environment for which they have not been trained. In that case, we need to retrain them with new labeled examples. In my research I am addressing the question of how we can teach the computer efficiently without too much manual effort.

There are different ways of collecting training examples. We can simply take a bunch of videos and label all interactions that occur in them by hand. This will take some time, and because we do not know how many examples of each interaction the videos contain, we do not know how many videos are sufficient.

In our approach, we instead label the examples and train the computer at the same time. This has the advantage that we can stop when the computer has learned enough and we do not waste valuable time. Furthermore, we can ask the computer which interactions it has trouble recognizing and consequently label more of those to help it improve.
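The loop described above can be sketched with uncertainty sampling, a common active learning strategy. This is a minimal illustration under stated assumptions, not the method from the dissertation: the classifier is a simple nearest-centroid model, uncertainty is measured as the margin between the two closest centroids, and `ask_annotator` is a hypothetical stand-in for the human who provides labels.

```python
import math


def fit(labeled):
    """Return one centroid (mean feature vector) per label."""
    grouped = {}
    for features, label in labeled:
        grouped.setdefault(label, []).append(features)
    return {
        label: [sum(column) / len(rows) for column in zip(*rows)]
        for label, rows in grouped.items()
    }


def margin(centroids, features):
    """Gap between the two closest centroids; a small gap means low confidence.

    Assumes at least two labels are present in `centroids`.
    """
    distances = sorted(math.dist(c, features) for c in centroids.values())
    return distances[1] - distances[0]


def active_learning(seed, pool, ask_annotator, budget):
    """Label at most `budget` pool items, always querying the least certain one.

    `seed` holds a few labeled (features, label) pairs covering at least two
    labels, `pool` holds unlabeled feature vectors, and `ask_annotator` is a
    hypothetical callback that returns the label for a feature vector.
    """
    labeled = list(seed)
    pool = list(pool)
    for _ in range(min(budget, len(pool))):
        centroids = fit(labeled)
        query = min(pool, key=lambda x: margin(centroids, x))
        pool.remove(query)
        labeled.append((query, ask_annotator(query)))
    return fit(labeled), labeled
```

A fixed `budget` is the simplest stopping rule. Stopping "when the computer has learned enough" would need an additional criterion, for example when the smallest margin in the pool exceeds a chosen threshold.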

The key questions we would like to answer with our research are:

- How can we reduce the manual effort for the person who provides the examples while still training an accurate classifier?

- Consequently, how can we identify examples that would improve the classifier before the example is labeled?

- How should we deal with the temporal dimension? An interaction is performed within a certain amount of time, but initially (before either human or algorithm has made an attempt to label it) we do not know when it begins and ends. It is just one motion pattern in a long sequence of other patterns. How can we identify an example of an interaction without knowing the temporal extent of the interaction?
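To illustrate the last question, one simple baseline is to propose fixed-length, overlapping windows as candidate examples and let the human or the classifier judge each window. This sketch is an assumption for illustration only and is not presented as the answer found in this research; the function name and the window and stride values are hypothetical.

```python
def sliding_windows(num_frames, window, stride):
    """Yield (start, end) frame ranges, end exclusive, covering a video.

    A fixed window length ignores the true extent of an interaction, which
    is exactly the limitation the question above points at.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if num_frames <= 0:
        return
    last_start = max(num_frames - window, 0)
    for start in range(0, last_start + 1, stride):
        yield start, min(start + window, num_frames)


# At 25 frames per second, 50 frames correspond to two seconds of video.
candidates = list(sliding_windows(num_frames=100, window=50, stride=25))
print(candidates)
```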

References

- [1] -- M. Lorbach, “Automated Recognition of Rodent Social Behavior,” Ph.D. dissertation, Utrecht University, Utrecht, The Netherlands, 2017.

- [2] -- N. Tinbergen, “On Aims and Methods of Ethology,” Zeitschrift für Tierpsychologie, vol. 20, no. 4, pp. 410–433, 1963.

- [3] -- D. J. Anderson and P. Perona, “Toward a Science of Computational Ethology,” Neuron, vol. 84, no. 1, pp. 18–31, 2014.

- [4] -- J. W. Krakauer, A. A. Ghazanfar, A. Gomez-Marin, M. A. MacIver, and D. Poeppel, “Neuroscience Needs Behavior: Correcting a Reductionist Bias,” Neuron, vol. 93, no. 3, pp. 480–490, 2017.

- [5] -- W. M. S. Russell and R. L. Burch, The Principles of Humane Experimental Technique. London: Methuen, 1959.

- [6] -- M. Lorbach, E. I. Kyriakou, R. Poppe, E. A. van Dam, L. P. J. J. Noldus, and R. C. Veltkamp, “Learning to Recognize Rat Social Behavior: Novel Dataset and Cross-Dataset Application,” Journal of Neuroscience Methods, vol. 300, pp. 166–172, Apr. 2018.

- [7] -- S. E. R. Egnor and K. Branson, “Computational Analysis of Behavior,” Annual Review of Neuroscience, vol. 39, no. 1, pp. 217–236, 2016.

- [8] -- A. A. Robie, K. M. Seagraves, S. E. R. Egnor, and K. Branson, “Machine Vision Methods for Analyzing Social Interactions,” Journal of Experimental Biology, vol. 220, no. 1, pp. 25–34, 2017.

- [9] -- M. Lorbach, R. Poppe, E. A. van Dam, L. P. J. J. Noldus, and R. C. Veltkamp, “Automated Recognition of Social Behavior in Rats: The Role of Feature Quality,” in Proc. Conf. Image Analysis and Processing, 2015, pp. 565–574.

- [10] -- W. Hong et al., “Automated Measurement of Mouse Social Behaviors Using Depth Sensing, Video Tracking, and Machine Learning,” Proc. National Academy of Sciences, vol. 112, no. 38, pp. E5351–E5360, 2015.

- [11] -- J. P. Monteiro, H. P. Oliveira, P. Aguiar, and J. S. Cardoso, “A Depth-Map Approach for Automatic Mice Behavior Recognition,” in Proc. Conf. Image Processing (ICIP), 2014, pp. 2261–2265.

- [12] -- T.-H. Ou-Yang, M.-L. Tsai, C.-T. Yen, and T.-T. Lin, “An Infrared Range Camera-Based Approach for Three-Dimensional Locomotion Tracking and Pose Reconstruction in a Rodent,” Journal of Neuroscience Methods, vol. 201, no. 1, pp. 116–123, 2011.